Overview

I like the Self-Tracking templates that come with the latest release of the Entity Framework in VS2010 RC. If you haven't read much about them before, have a look at the ADO.NET team blog.

In this post I'll share a customised version of the VS2010 RC Self-Tracking framework that I've built in my spare time. Although it is largely based on the Entity Framework Self-Tracking feature in VS2010 RC, it also contains a number of enhancements. Specifically, this framework:

- Provides support for code-only self-tracking entities and complex types that do not involve the EF designer. Don't get me wrong, I like the designer, but it doesn't scale well once you have more than a handful of classes. I guess one could modify or tweak the existing templates so that you can split your domain model across several edmx files, but I wanted a pure code-only approach...so instead I've created my own T4 template that uses your domain object classes as the source of its generation.

- Demonstrates how to implement a persistence-ignorant data access layer for EF. Specifically, I expose IUnitOfWork, ISession and IRepository interfaces and register my EF-specific implementations with an IoC container, in this case Unity.

- Includes a few minor tweaks and bug fixes to improve common usage scenarios with Self-Tracking entities...more about this later.

- Shows how to use the self-tracking framework via the included sample domain model and unit tests.

Note: I haven't included WCF usage in the sample provided here, although I have already used the framework with WCF with great success. Perhaps I will blog about this in a future post…

Before I begin, I just want to reiterate that the code directly related to implementing self-tracking support is primarily a copy-and-paste job from the code generated by the Self-Tracking templates in the VS2010 RC release. I have, however, wrapped most of the generated code into methods that can be invoked succinctly from your domain objects. Most of my work went into creating a new T4 code generation template and making the data access layer persistence ignorant.

I'd also like to mention that I found the following resource quite helpful when I started out with EF4, especially the bits on mapping POCO objects and the repository implementation for loading navigation properties. It's certainly worth a read if you're new to EF4.

Without further ado, let's get started and see how to use this framework.

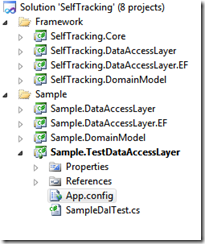

Getting Started: The Sample Project

The image below shows the project structure.

The following provides more detail on the individual framework projects:

- SelfTracking.Core. This project provides our IoC implementation (via Unity). It also contains a helper method to make validation with the Enterprise Library Validation block a bit easier when the type of the object being validated is not known at compile time.

- SelfTracking.DataAccessLayer contains the interfaces for our persistence ignorant data access layer.

- SelfTracking.DataAccessLayer.EF is our Entity Framework-specific implementation of the Data Access Layer interfaces. There’s also a repository implementation for non-self-tracking entities.

- SelfTracking.DomainModel contains the Self-Tracking framework. It also contains the T4 template you should copy to your own project and some code snippets you may find useful when writing your domain objects and/or properties.

The Sample projects demonstrate usage of this self-tracking framework by introducing a very simple domain model that includes one-to-many & one-to-one associations as well as a complex object.

- Sample.DataAccessLayer introduces the IStandardTrackableObjectRepository interface for our sample project. I’ve chosen to only use one repository interface for this domain as it more than suffices – however larger projects will most likely require more.

- Sample.DataAccessLayer.EF contains the EF implementation of IStandardTrackableObjectRepository, mappings for our domain objects as well as a registrar class to assist with mapping/registration of our domain objects with our IoC (Inversion of Control) container.

- Sample.DomainModel contains our simple domain model.

- Sample.TestDataAccessLayer contains a few unit tests to keep me honest…mmm, since I'm talking about being honest here...these are actually integration tests, not unit tests - either way, you'll get some insight on how this all fits together by looking at these.

The example domain is depicted below - a few important points are worth mentioning:

- There's a many-to-many relationship between Company and CompanyDivision (e.g. “Marketing”, “Accounting”…). Notice how this is mapped as two one-to-many relationships rather than a single many-to-many relationship. This is deliberate, and I would strongly encourage you to do the same in your projects. Basically, one often needs to store additional data as part of a many-to-many relationship (e.g. “created by user”, or “is active” if you do logical deletes), and this can't be achieved with a single many-to-many mapping. Even if you don't initially think you'll need to store additional data with this type of relationship, you could be in for a bit of rework later when you need to change it. But don't just take my word for it…NHibernate in Action gives a much better and lengthier discussion of this matter.

- Each Company can have a CEO (0-1 multiplicity)

- Each Employee must be employed by a company, and each company can employ many employees (one-to-many)

- For demonstration purposes, Address is a deliberately bad example of a complex object. Typically you'd make this an entity instead so that you can have foreign keys for things like State and PostCode (i.e. ZIP code for folks in the US).
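To make the "two one-to-many relationships" idea concrete, here is a minimal sketch in plain C#, with no self-tracking and no EF mapping. The link class CompanyDivisionMembership and its CreatedBy/IsActive properties are hypothetical illustrations of the "additional data" mentioned above; the sample project's actual classes may be shaped differently.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified POCOs (no self-tracking) illustrating the link-entity approach.
public class Company
{
    public string Name { get; set; }
    public List<CompanyDivisionMembership> Memberships { get; } = new List<CompanyDivisionMembership>();

    // Only divisions whose link row has not been logically deleted.
    public IEnumerable<CompanyDivision> ActiveDivisions =>
        Memberships.Where(m => m.IsActive).Select(m => m.Division);
}

public class CompanyDivision
{
    public string Name { get; set; }   // e.g. "Marketing", "Accounting"
    public List<CompanyDivisionMembership> Memberships { get; } = new List<CompanyDivisionMembership>();
}

// The link entity: one-to-many from Company, one-to-many from CompanyDivision,
// plus the extra data a plain many-to-many mapping has nowhere to store.
public class CompanyDivisionMembership
{
    public Company Company { get; set; }
    public CompanyDivision Division { get; set; }
    public string CreatedBy { get; set; }
    public bool IsActive { get; set; } = true;

    // Creates a link and adds it to both one-to-many collections.
    public static CompanyDivisionMembership Link(Company company, CompanyDivision division, string createdBy)
    {
        var link = new CompanyDivisionMembership { Company = company, Division = division, CreatedBy = createdBy };
        company.Memberships.Add(link);
        division.Memberships.Add(link);
        return link;
    }
}
```

A logical delete then becomes a matter of setting IsActive to false on the link row, leaving both the company and the division untouched.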

Using the T4 Template

When creating a new domain model project, first copy the TrackableObjectCodeGen.tt T4 template file to your new project, and make sure the Custom Tool property of this file is set to TextTemplatingFileGenerator in the Properties window. You'll also have to trigger the template manually whenever you modify your domain classes, although you can also get it to generate code automatically every time you build your project.

I'll use the Employee class from the sample domain project as an example, as it contains quite a few different types of properties. The following listing shows the code you have to write yourself, not the code that's generated.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.Serialization;
using System.Text;
using Microsoft.Practices.EnterpriseLibrary.Validation.Validators;
using Sample.DomainModel.Common;
using SelfTracking.DomainModel;
using SelfTracking.DomainModel.TrackableObject;

namespace Sample.DomainModel.Company
{
    [Serializable]
    [DataContract(IsReference = true), KnownType(typeof(Address)), KnownType(typeof(Company))]
    public partial class Employee : ActiveBasicObject, IConcurrencyObject
    {
        #region Fields
        public const int MaxNameLength = 20;
        private Address _HomeAddress;
        private bool _HomeAddressInitialised;
        private string _FirstName;
        private string _MiddleName;
        private string _LastName;
        private Company _CeoOfCompany;
        private Company _EmployedBy;
        private byte[] _concurrencyToken;
        #endregion

        #region Primitive Properties
        [DataMember]
        [StringLengthValidator(1, MaxNameLength)]
        public string FirstName
        {
            get { return PrimitiveGet(_FirstName); }
            set { PrimitiveSet("FirstName", ref _FirstName, value); }
        }

        [DataMember]
        [IgnoreNulls]
        [StringLengthValidator(1, MaxNameLength)]
        public string MiddleName
        {
            get { return PrimitiveGet(_MiddleName); }
            set { PrimitiveSet("MiddleName", ref _MiddleName, value); }
        }

        [DataMember]
        [StringLengthValidator(1, MaxNameLength)]
        public string LastName
        {
            get { return PrimitiveGet(_LastName); }
            set { PrimitiveSet("LastName", ref _LastName, value); }
        }

        /// <summary>
        /// Gets or sets the concurrency token that is used in optimistic concurrency checks
        /// (e.g. typically maps to a timestamp column in SQL Server databases)
        /// </summary>
        /// <value>The concurrency token.</value>
        [DataMember]
        public byte[] ConcurrencyToken
        {
            get { return PrimitiveGet(_concurrencyToken); }
            set
            {
                // No point using a "proper" equality comparer here, as this field is auto-calculated
                // and typically only set by the persistence layer.
                PrimitiveSet("ConcurrencyToken", ref _concurrencyToken, value, (a, b) => false);
            }
        }

        #endregion

        #region Complex Properties
        [DataMember]
        [ObjectValidator]
        public Address HomeAddress
        {
            get { return ComplexGet(ref _HomeAddress, ref _HomeAddressInitialised, HandleHomeAddressChanging); }
            set
            {
                ComplexSet("HomeAddress", ref _HomeAddress, value,
                    ref _HomeAddressInitialised, HandleHomeAddressChanging);
            }
        }
        #endregion

        #region Navigation Properties
        /// <summary>
        /// If this employee is a CEO of a company, this property navigates to the associated company.
        /// </summary>
        /// <value>The ceo of company.</value>
        [DataMember, Persist("Company-Ceo")]
        public Company CeoOfCompany
        {
            get { return OneToOneNavigationGet(_CeoOfCompany); }
            set
            {
                FromChildOneToOneNavigationSet("CeoOfCompany", ref _CeoOfCompany, value,
                    root => root.Ceo, (root, newValue) => root.Ceo = newValue, true);
            }
        }

        /// <summary>
        /// The company that currently employs this employee - mandatory (otherwise this isn't an employee!)
        /// </summary>
        /// <value>The employed by.</value>
        [DataMember, Persist("Company-Employees")]
        [NotNullValidator]
        public Company EmployedBy
        {
            get { return ManyToOneNavigationGet(ref _EmployedBy); }
            set
            {
                ManyToOneNavigationSet("EmployedBy", ref _EmployedBy, value, oneside => oneside.Employees);
            }
        }

        #endregion
    }
}
```

This class inherits from ActiveBasicObject, although typically you would only need to derive from the TrackableObject class. I've used ActiveBasicObject as an easy way to give the majority of my entities an Id and an IsActive Boolean property (for logical deletes).

Undoubtedly, all those PrimitiveGet/Set, ManyToOneNavigationGet/Set and similar calls in the property implementations are going to look strange to you. These methods serve two important purposes:

- They tell the T4 template what kind of property each one is, so that it can generate the right code (the template inspects the property setter bodies for calls to these methods).

- They contain all the code necessary for self-tracking to function, including raising property changed events and keeping the navigation properties on both sides of an association in sync. Basically, these methods contain the code copied from the VS2010 RC Self-Tracking release, cleaned up a bit and neatly wrapped into one-liner methods. Aside: in most cases the getter methods don't do anything other than return the supplied field value, so they aren't strictly required and the template will still work without them. Nonetheless, I think having both get and set methods adds a nice symmetry to the property implementations; besides, you could extend the getter methods to implement some of your own functionality (much as you might with aspect-oriented programming).
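The following is a minimal sketch of what a PrimitiveSet-style helper might do, assuming a simplified state model. The TrackableObjectSketch base class, the TrackedState enum and the Person class are hypothetical; the framework's real methods come from the generated VS2010 RC self-tracking code and likely do more (for example, working through a change tracker object).

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;

// Hypothetical, simplified object state (not the framework's change tracker).
public enum TrackedState { Unchanged, Added, Modified, Deleted }

// Hypothetical base class illustrating what PrimitiveGet/PrimitiveSet-style helpers might do.
public abstract class TrackableObjectSketch : INotifyPropertyChanged
{
    public event PropertyChangedEventHandler PropertyChanged;

    public TrackedState State { get; set; } = TrackedState.Unchanged;

    // Getter helper: simply returns the field (a hook for extra behaviour if needed).
    protected T PrimitiveGet<T>(T field)
    {
        return field;
    }

    // Setter helper: ignores no-op assignments, updates the field,
    // marks the object Modified (unless Added/Deleted) and raises PropertyChanged.
    protected void PrimitiveSet<T>(string propertyName, ref T field, T value,
                                   Func<T, T, bool> areEqual = null)
    {
        if (areEqual == null)
        {
            areEqual = (a, b) => EqualityComparer<T>.Default.Equals(a, b);
        }
        if (areEqual(field, value))
        {
            return;
        }

        field = value;

        if (State != TrackedState.Added && State != TrackedState.Deleted)
        {
            State = TrackedState.Modified;
        }

        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propertyName));
    }
}

// Usage mirrors the Employee.FirstName property in the listing above.
public class Person : TrackableObjectSketch
{
    private string _FirstName;

    public string FirstName
    {
        get { return PrimitiveGet(_FirstName); }
        set { PrimitiveSet("FirstName", ref _FirstName, value); }
    }
}
```

Passing `(a, b) => false` as the comparer, as the ConcurrencyToken property does, means every assignment counts as a change.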

When you run the T4 template, apart from generating a new corresponding partial class with the required self-tracking support code, it may also make a few minor changes to your original domain object class. This is certainly not something you'd normally want code generation to do…but before you get all excited and cry foul, consider that it will:

- Make your domain object class partial, if it isn’t already;

- Add any missing KnownType attributes at the top of your class for all the distinct reference and DateTimeOffset persistent property types contained in your self-tracking class. For example, if you have a Person property in a class X, and you also have a Customer class that derives from Person, both Customer and Person KnownType attributes will be added to class X. That said, due to the limitations of EnvDTE (which I used to drive the code gen), the T4 template will ignore interfaces. If however you consider the task of manually decorating your classes with KnownType attributes as one of the true pleasures of life, then by all means don’t let me stop you from having fun - simply set the AddMissingKnownTypeAttributes global parameter in the T4 template to false.

- Notify you via warnings in the Visual Studio Error List if it made any of the above changes to your classes.
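As a rough illustration of the KnownType rule, the sketch below computes the missing KnownType entries for a class using System.Reflection. This is a swapped-in technique: the actual template works against source code through EnvDTE, not against compiled types. The KnownTypeSketch class and the Person/Customer/Order sample types are hypothetical, and exempting strings and arrays is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;
using System.Runtime.Serialization;

public static class KnownTypeSketch
{
    // Returns the KnownType entries 'entityType' would need but does not yet declare.
    // 'candidates' are the types searched for subclasses of each property type.
    public static IList<Type> MissingKnownTypes(Type entityType, IEnumerable<Type> candidates)
    {
        var candidateList = candidates.ToList();

        // Persistent property types: [DataMember] properties whose type is a class
        // (excluding string and arrays, an assumption) or DateTimeOffset. Interfaces are skipped.
        var propertyTypes = entityType
            .GetProperties(BindingFlags.Public | BindingFlags.Instance)
            .Where(p => p.IsDefined(typeof(DataMemberAttribute), true))
            .Select(p => p.PropertyType)
            .Where(t => (t.IsClass && t != typeof(string) && !t.IsArray) || t == typeof(DateTimeOffset))
            .Distinct();

        var required = new HashSet<Type>();
        foreach (var t in propertyTypes)
        {
            required.Add(t);
            // Also require every concrete subclass, e.g. Customer for a Person property.
            foreach (var sub in candidateList.Where(c => c != t && !c.IsAbstract && t.IsAssignableFrom(c)))
            {
                required.Add(sub);
            }
        }

        // Remove the types already declared via [KnownType(typeof(...))].
        var declared = entityType.GetCustomAttributes<KnownTypeAttribute>(false)
                                 .Where(a => a.Type != null)
                                 .Select(a => a.Type);
        required.ExceptWith(declared);

        return required.OrderBy(t => t.Name).ToList();
    }
}

// Hypothetical sample types.
public class Person { }
public class Customer : Person { }

[DataContract, KnownType(typeof(Person))]
public class Order
{
    [DataMember] public Person Buyer { get; set; }
    [DataMember] public DateTimeOffset PlacedAt { get; set; }
    [DataMember] public string Notes { get; set; }
    [DataMember] public byte[] Token { get; set; }
}
```

For Order, Person is already declared, so only Customer (a subclass of the Person property type) and DateTimeOffset are reported as missing.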

You have most likely also noticed the Persist attributes. In short, without these attributes the T4 template would have a really hard time determining which pair of properties, in two different classes, take part in the same one-to-one or one-to-many association. The text in the attribute is the name of the association, and the T4 template will report errors if it is unable to match association properties because of incorrectly used or missing Persist attributes. Finally, I've also decorated the properties with attributes from the Enterprise Library Validation block; their use is completely optional, so feel free to use whatever framework or mechanism you prefer.
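The sketch below shows one way association ends could be paired by name. It uses reflection over compiled types rather than EnvDTE, and the PersistAttribute declared here is a hypothetical stand-in whose shape (a single association-name constructor argument) is inferred from the usage in the listing above. Each association name must appear on exactly two properties; anything else is reported as an error, much as the template reports unmatched Persist attributes.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical stand-in for the framework's Persist attribute.
[AttributeUsage(AttributeTargets.Property)]
public sealed class PersistAttribute : Attribute
{
    public PersistAttribute(string associationName)
    {
        AssociationName = associationName;
    }

    public string AssociationName { get; }
}

public static class AssociationMatcher
{
    // Groups [Persist] properties by association name and requires exactly two ends per association.
    public static Dictionary<string, PropertyInfo[]> MatchAssociations(IEnumerable<Type> types)
    {
        var ends = from t in types
                   from p in t.GetProperties(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly)
                   let attr = p.GetCustomAttribute<PersistAttribute>()
                   where attr != null
                   select new { attr.AssociationName, Property = p };

        var result = new Dictionary<string, PropertyInfo[]>();
        foreach (var group in ends.GroupBy(e => e.AssociationName))
        {
            PropertyInfo[] props = group.Select(e => e.Property).ToArray();
            if (props.Length != 2)
            {
                throw new InvalidOperationException(
                    $"Association '{group.Key}' has {props.Length} end(s); expected exactly 2.");
            }
            result.Add(group.Key, props);
        }
        return result;
    }
}

// Hypothetical sample model mirroring the Company/Employee associations.
public class Company
{
    [Persist("Company-Employees")] public List<Employee> Employees { get; set; }
    [Persist("Company-Ceo")] public Employee Ceo { get; set; }
}

public class Employee
{
    [Persist("Company-Employees")] public Company EmployedBy { get; set; }
    [Persist("Company-Ceo")] public Company CeoOfCompany { get; set; }
}
```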

The following code block shows the other end of the “Company-Employees” association, which lives in the Company class. Note the use of the OneToManyNavigationGet/Set methods instead of ManyToOneNavigationGet/Set. It is also important to realise that the FixupEmployees method is generated by the T4 template, and it MUST be named Fixup followed by the property name (FixupMyPropertyName), although feel free to modify or enhance the T4 template if you're not happy with this restriction. This also means you'll get a compile error unless you first run the T4 template to generate the code. I'll stress it again…

don't forget to regenerate your code before you compile.

```csharp
private TrackableCollection<Employee> _Employees;

[DataMember, Persist("Company-Employees")]
public TrackableCollection<Employee> Employees
{
    get
    {
        return OneToManyNavigationGet(ref _Employees, FixupEmployees);
    }
    private set
    {
        OneToManyNavigationSet("Employees", ref _Employees, value, FixupEmployees);
    }
}
```
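To show what keeping both ends of a one-to-many association in sync involves, here is a simplified sketch of the kind of work ManyToOneNavigationSet and a generated FixupEmployees handler do together. It is not the generated code: ObservableCollection<T> stands in for TrackableCollection<T>, there is no change tracking, and the class shapes are hypothetical.

```csharp
using System.Collections.ObjectModel;
using System.Collections.Specialized;

// Hypothetical "one" side: collection edits are fixed up on the many-to-one side.
public class Company
{
    private ObservableCollection<Employee> _employees;

    public ObservableCollection<Employee> Employees
    {
        get
        {
            if (_employees == null)
            {
                _employees = new ObservableCollection<Employee>();
                _employees.CollectionChanged += FixupEmployees;
            }
            return _employees;
        }
    }

    // Keeps Employee.EmployedBy in sync with additions to and removals from the collection.
    private void FixupEmployees(object sender, NotifyCollectionChangedEventArgs e)
    {
        if (e.NewItems != null)
        {
            foreach (Employee item in e.NewItems)
                item.EmployedBy = this;
        }
        if (e.OldItems != null)
        {
            foreach (Employee item in e.OldItems)
                if (ReferenceEquals(item.EmployedBy, this))
                    item.EmployedBy = null;
        }
    }
}

// Hypothetical "many" side: setting the reference moves the employee between collections.
public class Employee
{
    private Company _employedBy;

    public Company EmployedBy
    {
        get { return _employedBy; }
        set
        {
            if (ReferenceEquals(_employedBy, value)) return;
            var previous = _employedBy;
            _employedBy = value;   // assign first so the fixup handler sees no change and stops

            if (previous != null && previous.Employees.Contains(this))
                previous.Employees.Remove(this);
            if (value != null && !value.Employees.Contains(this))
                value.Employees.Add(this);
        }
    }
}
```

Assigning the field before touching either collection is what stops the two sides from recursing into each other indefinitely.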

The following examples show each of the property types supported by this self-tracking framework, along with the code snippet shortcut for each.

Primitive property (code snippet: tpropp)

```csharp
[DataMember]
public string FirstName
{
    get { return PrimitiveGet(_FirstName); }
    set { PrimitiveSet("FirstName", ref _FirstName, value); }
}
```

Complex object property (code snippet: tpropc)

```csharp
private Address _HomeAddress;
private bool _HomeAddressInitialised;

[DataMember]
public Address HomeAddress
{
    get
    {
        return ComplexGet(ref _HomeAddress,
            ref _HomeAddressInitialised, HandleHomeAddressChanging);
    }
    set
    {
        ComplexSet("HomeAddress", ref _HomeAddress, value,
            ref _HomeAddressInitialised, HandleHomeAddressChanging);
    }
}
```

One-to-many (code snippet: tpropotm)

```csharp
private TrackableCollection<Employee> _Employees;

[DataMember, Persist("Company-Employees")]
public TrackableCollection<Employee> Employees
{
    get
    {
        return OneToManyNavigationGet(ref _Employees, FixupEmployees);
    }
    private set
    {
        OneToManyNavigationSet("Employees", ref _Employees,
            value, FixupEmployees);
    }
}
```

Many-to-one (code snippet: tpropmto)

```csharp
private Company _EmployedBy;

[DataMember, Persist("Company-Employees")]
public Company EmployedBy
{
    get { return ManyToOneNavigationGet(ref _EmployedBy); }
    set
    {
        ManyToOneNavigationSet("EmployedBy", ref _EmployedBy,
            value, oneside => oneside.Employees);
    }
}
```

Root one-to-one, for the class considered the primary/root of the association (code snippet: tproproto)

```csharp
private Employee _Ceo;

[DataMember, Persist("Company-Ceo")]
public Employee Ceo
{
    get { return OneToOneNavigationGet(_Ceo); }
    set
    {
        FromRootOneToOneNavigationSet("Ceo", ref _Ceo, value,
            child => child.CeoOfCompany,
            (child, newValue) => child.CeoOfCompany = newValue);
    }
}
```

Child one-to-one, for the class considered the “child” of the association (code snippet: tpropcoto)

```csharp
private Company _CeoOfCompany;

[DataMember, Persist("Company-Ceo")]
public Company CeoOfCompany
{
    get { return OneToOneNavigationGet(_CeoOfCompany); }
    set
    {
        FromChildOneToOneNavigationSet(
            "CeoOfCompany", ref _CeoOfCompany, value,
            root => root.Ceo,
            (root, newValue) => root.Ceo = newValue, true);
    }
}
```
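For the one-to-one case, the root and child setters have to cooperate so that assigning either end detaches any previous partner. The sketch below is a hypothetical, tracking-free approximation of what the FromRootOneToOneNavigationSet/FromChildOneToOneNavigationSet pair has to achieve; it does not reproduce their actual parameters (such as the trailing Boolean passed in the child setter).

```csharp
// Hypothetical root side of the "Company-Ceo" association.
public class Company
{
    private Employee _ceo;

    public Employee Ceo
    {
        get { return _ceo; }
        set
        {
            if (ReferenceEquals(_ceo, value)) return;
            var previous = _ceo;
            _ceo = value;
            // Detach the old child, then point the new child back at this root.
            if (previous != null && ReferenceEquals(previous.CeoOfCompany, this))
                previous.CeoOfCompany = null;
            if (value != null)
                value.CeoOfCompany = this;
        }
    }
}

// Hypothetical child side of the same association.
public class Employee
{
    private Company _ceoOfCompany;

    public Company CeoOfCompany
    {
        get { return _ceoOfCompany; }
        set
        {
            if (ReferenceEquals(_ceoOfCompany, value)) return;
            var previous = _ceoOfCompany;
            _ceoOfCompany = value;
            // Detach the old root, then point the new root back at this child.
            if (previous != null && ReferenceEquals(previous.Ceo, this))
                previous.Ceo = null;
            if (value != null)
                value.Ceo = this;
        }
    }
}
```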

Unit of Work

In order to achieve persistence ignorance, the Unit of Work implementation wraps the details of the EF4 ObjectContext class. This encapsulation has the added benefit of making it easy to extend the capabilities of the object context to fit your particular project needs. In my case, I've incorporated the following useful features into my Unit of Work implementation:

- When you commit changes, all self-tracking entities are “automagically” reset to an unmodified state. Otherwise you would have to reset every entity manually, including child entities referenced via navigation properties in your object graph.

- The unit of work provides a GetManagedEntities method that gives easy access to all entities currently managed by (i.e. attached to) the Unit of Work, that is, the EF4 ObjectContext. This method also makes it easy to filter managed entities by their current state (added, deleted, unmodified or modified).
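The two Unit of Work behaviours above can be approximated in memory as follows. This is a hypothetical sketch: ManagedState (a [Flags] enum, so one filter can cover several states), ITrackedEntity, InMemoryUnitOfWork and Widget are not the framework's types, and a real implementation would delegate to the EF4 ObjectContext rather than keep its own list.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical [Flags] state so one filter value can select several states at once.
[Flags]
public enum ManagedState
{
    Unchanged = 1,
    Added = 2,
    Modified = 4,
    Deleted = 8,
    Changed = Added | Modified | Deleted
}

public interface ITrackedEntity
{
    ManagedState State { get; set; }
}

// Hypothetical in-memory unit of work approximating the two behaviours described above.
public class InMemoryUnitOfWork
{
    private readonly List<ITrackedEntity> _managed = new List<ITrackedEntity>();

    public void Attach(ITrackedEntity entity)
    {
        _managed.Add(entity);
    }

    // Managed entities of type T whose state matches any of the flags in 'filter'.
    public IEnumerable<T> GetManagedEntities<T>(ManagedState filter) where T : ITrackedEntity
    {
        return _managed.OfType<T>().Where(e => (e.State & filter) != 0);
    }

    public void Commit()
    {
        // A real implementation would persist changes here first.
        // Afterwards, deleted entities stop being managed and the rest are reset to Unchanged.
        _managed.RemoveAll(e => e.State == ManagedState.Deleted);
        foreach (var entity in _managed)
        {
            entity.State = ManagedState.Unchanged;
        }
    }
}

// Hypothetical sample entity; new objects start out as Added.
public class Widget : ITrackedEntity
{
    public string Name { get; set; }
    public ManagedState State { get; set; } = ManagedState.Added;
}
```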

It's worth mentioning that one *could* also easily extend the Unit of Work implementation to validate all domain objects upon commit (and/or when they are attached to the Unit of Work). This would certainly provide an easy, automatic and centralised mechanism for ensuring that business rules are enforced in your domain. Sounds too easy? Well, it probably is! Personally, I wouldn't recommend it, as I think validation is typically best done at a higher tier in your architecture, such as a service layer. For example, you would typically want to validate user-supplied data against business rules BEFORE commencing any expensive operations; if validation is postponed until commit, you've potentially wasted a lot of cycles and made your service more susceptible to denial-of-service attacks. Also consider that the DAL sits too low in a tiered architecture to always have enough operational context to enforce business rules properly (and efficiently); for example, are there workflows or notifications that need to be considered in addition to this save operation? Still, I mention it because for simple scenarios this may be all you need. The following example shows how one could go about it.

```csharp
public void Commit(bool acceptChanges = true)
{
    /* One *could* validate modified objects here as follows, although this is typically better done at
     * the service level or in a higher business tier of your application. */

    List<ValidationResults> validationResults = new List<ValidationResults>();
    foreach (var entity in GetManagedEntities<IObjectWithChangeTracker>(ObjectStateConstants.Changed))
    {
        var result = ValidationHelper.Validate(entity);
        if (!result.IsValid)
        {
            validationResults.Add(result);
        }
    }
    if (validationResults.Count > 0)
    {
        throw new ValidationException(validationResults);
    }

    EntitySession.Context.SaveChanges(acceptChanges ? SaveOptions.AcceptAllChangesAfterSave : SaveOptions.None);
    if (acceptChanges)
    {
        AcceptChanges();
    }
}
```

Notice the use of the ValidationHelper class inside the validation loop. Basically, the Enterprise Library Validation block only provides a generic Validate<T>(T target) method. The problem with this is that if you don't know the type of the object you want to validate at compile time, this Validate method is of little use, as it only considers the validation attributes of the type known at compile time. For example, if T is BasicObject and you supply an instance of Employee, only the properties of the BasicObject class will be validated. The ValidationHelper class overcomes this limitation by simply using reflection to invoke the Enterprise Library validation method dynamically with the correct type for T.
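The reflection trick can be sketched as follows. StaticValidator is a hypothetical stand-in for a generic validator that, like the Enterprise Library method described above, only inspects members of the compile-time type T (its "string properties must be non-empty" rule is made up for the example). ValidationHelperSketch then closes that generic method over the object's runtime type with MethodInfo.MakeGenericMethod; it is not the framework's actual ValidationHelper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical generic validator: only looks at members of the compile-time type T.
// Rule (made up for the example): public string properties must be non-empty.
public static class StaticValidator
{
    public static IList<string> Validate<T>(T target)
    {
        var errors = new List<string>();
        foreach (PropertyInfo p in typeof(T).GetProperties(BindingFlags.Public | BindingFlags.Instance))
        {
            if (p.PropertyType == typeof(string) && string.IsNullOrEmpty((string)p.GetValue(target)))
            {
                errors.Add(p.Name + " is required");
            }
        }
        return errors;
    }
}

// Sketch of the ValidationHelper idea: close Validate<T> over the runtime type via reflection.
public static class ValidationHelperSketch
{
    private static readonly MethodInfo OpenValidate = typeof(StaticValidator).GetMethod("Validate");

    public static IList<string> Validate(object target)
    {
        if (target == null)
        {
            throw new ArgumentNullException(nameof(target));
        }
        MethodInfo closed = OpenValidate.MakeGenericMethod(target.GetType());
        return (IList<string>)closed.Invoke(null, new[] { target });
    }
}

// Hypothetical sample hierarchy.
public class BasicObject
{
    public string Id { get; set; }
}

public class Employee : BasicObject
{
    public string FirstName { get; set; }
}
```

Calling StaticValidator.Validate through a BasicObject reference misses the empty FirstName, whereas ValidationHelperSketch.Validate validates against Employee and reports it.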

Running the Tests

To run the included unit tests, make sure you modify app.config in the Sample.TestDataAccessLayer project so that it contains a valid SQL Server 2005/2008 connection string (SQL Express is fine).

When you run the tests, they automatically drop and re-create the schema according to the mappings defined in the Sample.DataAccessLayer.EF project. Schema creation is controlled by the following test class initializer: “Test” specifies the connection string name in app.config, and true indicates that the database should be dropped and re-created rather than simply ensuring that it exists.

```csharp
[ClassInitialize()]
public static void MyClassInitialize(TestContext testContext)
{
    new DataAccessLayerRegistrar("Test").EnsureDatabase(true);
}
```

I hope this helps you get started with this customised version of the EF Self-Tracking framework. There's certainly a lot more I could have blogged about…but this post is already much longer than I originally anticipated. Please let me know if there are particular features you'd like me to discuss in more detail in future posts.

Happy coding!

Adriaan