Many of these questions are addressed in the SF documentation provided by Microsoft, and in this post I certainly don’t plan on duplicating this information. Regardless, when I actually got to set up my SF cluster and deploy my applications, I came across a few unexpected challenges – and this is what I intend to cover here.

In my particular scenario I’m running Service Fabric on-premise. If you didn’t realise this was possible, it certainly is and you can find some excellent information here.

Managing multiple Application Environments

This may seem like an obvious question…well maybe! Traditionally, as software developers and IT professionals we’re used to provisioning separate hardware resources for each of our different application environments, like UAT and Production. So, why not just do the same in the Service Fabric world? Well, for starters, the minimum number of Nodes (i.e. machines) supported for a cluster running production workloads is five! That’s quite a few… and in my case, I had 5 environments – so that would mean 25 on-premise machines…that’s just crazy talk!

Now, for development purposes, you can run all your nodes on one machine – and this is certainly what happens when you install the Service Fabric SDK on your developer machine – but this is not really suitable for your test environments and I would strongly advise against it. Why? Well, in my experience, a lot of developers new to Service Fabric struggle with the notion that services in SF can move from one machine (Node) to another within the cluster – this means that:

a) You shouldn’t use local folder paths in your microservices configuration.

b) You shouldn’t use standard .NET app/web.config files – it’s quite a big topic, but you can find out more here.

c) You shouldn’t assume or rely on particular 3rd-party software being pre-installed on particular Nodes – if you need this, you’re probably best off using SF Docker containers, hosting it elsewhere…or using setup entry points.

d) If you require special security policy configuration for individual microservices, use the already provided SF policy configuration.

Thus, unless your test environments are configured to run on a multi-machine cluster, these types of bugs are likely going to be left as unfortunate exercises for your end-users…and let’s face it…there’s already enough confusing software out there…let’s not add to the pile!
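Points (c) and (d) can both be expressed in the manifests themselves. The fragments below are a minimal sketch, not configuration taken from this project: the names `Setup.bat`, `WebApi.exe`, `WebApiPkg` and `SetupAdminUser` are hypothetical, and a RunAs policy is only one example of the policy configuration Service Fabric provides.

```xml
<!-- ServiceManifest.xml (fragment): run a setup script before the service host starts. -->
<!-- Setup.bat and WebApi.exe are hypothetical file names. -->
<CodePackage Name="Code" Version="1.0.0">
  <SetupEntryPoint>
    <ExeHost>
      <Program>Setup.bat</Program>
      <WorkingFolder>CodePackage</WorkingFolder>
    </ExeHost>
  </SetupEntryPoint>
  <EntryPoint>
    <ExeHost>
      <Program>WebApi.exe</Program>
    </ExeHost>
  </EntryPoint>
</CodePackage>

<!-- ApplicationManifest.xml (fragment): run only the setup entry point as a local administrator. -->
<!-- WebApiPkg and SetupAdminUser are hypothetical names. -->
<ServiceManifestImport>
  <ServiceManifestRef ServiceManifestName="WebApiPkg" ServiceManifestVersion="1.0.0" />
  <Policies>
    <RunAsPolicy CodePackageRef="Code" UserRef="SetupAdminUser" EntryPointType="Setup" />
  </Policies>
</ServiceManifestImport>
<Principals>
  <Users>
    <User Name="SetupAdminUser">
      <MemberOf>
        <SystemGroup Name="Administrators" />
      </MemberOf>
    </User>
  </Users>
</Principals>
```

Because the setup entry point travels with the code package, it runs on whichever Node the service lands on, which is what makes it safer than assuming software is pre-installed.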

So, getting back to my original question:

Q: How should I deploy multiple environments of the same application?

A: Deploy them as uniquely named applications on the same cluster!

In concept, this is easy…all you have to do is specify multiple Publish Profiles – and unique Application names within each corresponding parameters file. Within your Service Fabric project, the PublishProfiles and ApplicationParameters folders contain xml files corresponding to each of the environments you configured. Below is an example application parameters file for the Production environment – this is where you can define a unique application name per environment:

    <?xml version="1.0" encoding="utf-8"?>
    <Application Name="fabric:/MyFabricApp-Production" xmlns="…">
      <Parameters>
        <Parameter Name="StatelessService_InstanceCount" Value="-1" />
      </Parameters>
    </Application>

Now I did say, in concept it’s easy…why? Well, I had a few other surprising things to consider and resolve.
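Before getting to those, it may help to see how a publish profile ties a cluster to one of these parameter files. The following is a minimal sketch rather than a file taken from this project: the cluster endpoint `my-sf-cluster:19000` is a hypothetical placeholder, and the relative path assumes the default Visual Studio project layout.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- PublishProfiles\Production.xml (sketch) -->
<PublishProfile xmlns="http://schemas.microsoft.com/2015/05/fabrictools">
  <!-- Hypothetical cluster endpoint; every environment here targets the same cluster. -->
  <ClusterConnectionParameters ConnectionEndpoint="my-sf-cluster:19000" />
  <!-- The parameters file is what carries the unique application name per environment. -->
  <ApplicationParameterFile Path="..\ApplicationParameters\Production.xml" />
</PublishProfile>
```

With one such profile per environment, only the `ApplicationParameterFile` path needs to differ, since the connection endpoint is shared.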

Issue 1: Publishing the same versioned application package multiple times to the same SF Cluster

When you publish a Service Fabric application in Visual Studio, it uses the Deploy-FabricApplication.ps1 PowerShell script located in the aptly named Scripts folder.

I actually ended up using this PowerShell script in my automated build process too as it makes it easy to manage your application parameters via publish profiles – and the script will automatically read and apply these profile parameters during your deployment.

Note: There are lower level SF PowerShell commands you could use – but the one above was fine for my purposes. You can also read more about application configuration for multiple environments here.

There is one problem with this Deploy-FabricApplication.ps1 script though and I’ve reported this issue on GitHub. Basically, after a build, the versioned deployment package contains all the publish profiles for all environments. So, assuming your environments are:

- Development

- Staging

- Production

Upon deployment to your Development environment, Service Fabric will first register your 1.0 application package in the Service Fabric image store. Once registered, it will go through its normal process of either upgrading or creating this application.

All fine…except, when it comes to deploying your application to the Staging environment, this script will fail - why? Because this script tries to re-register the same application package version again with the image store – and you can’t have duplicate deployment packages with the same version.

Fortunately, all I had to do was tweak this PowerShell script with an additional parameter, called $BypassAppRegistration, and add new sections to the script to support a simple Upgrade or Create action without registering the package again.

Issue 2: Unique Gateway API ports for each Application Environment

One of my microservices hosted an API layer for consumption by client applications. This API was implemented using ASP.NET Core Web API and secured using Windows Authentication. Public-facing web application endpoints are typically referred to as gateway APIs as they are the ones servicing requests from outside the cluster – and as such, you typically want to expose them via well-known and static URIs. Any other “internal-facing” microservices – whether using Remoting, HTTP or some other protocol – are typically resolved via the Service Fabric Naming Service, so they don’t need static URIs.

When using ASP.NET Core, you can host your web application using either Kestrel or WebListener. Kestrel is not recommended for handling direct internet traffic (that said, if you put a load balancer or reverse proxy in front of it…that’s fine…as long as these front-facing services use a battle-tested implementation…e.g. IIS/HTTP.sys or nginx). Regardless, in my scenario I required my API to be secured using Windows Authentication – and only WebListener supports that.

Now to configure an endpoint in Service Fabric, it needs to be declared in the ServiceManifest.xml file – Resources section:

    <Resources>
      <Endpoints>
        <Endpoint Protocol="http" Name="ServiceEndpoint" Type="Input" Port="8354" />
      </Endpoints>
    </Resources>

The problem I faced is that there was no easy way to configure different ports for each of my different environment gateway API services – and because all my different environments run on the same cluster, this is a showstopper!
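For readers on a Service Fabric release that supports endpoint overrides, this showstopper can be avoided more directly. The fragment below is a minimal sketch and not the approach used in the rest of this post: it assumes the `ResourceOverrides` element of ApplicationManifest.xml is available in your runtime version, and the names `WebApiPkg` and `WebApi_Port` are hypothetical.

```xml
<!-- ApplicationManifest.xml (fragment). WebApiPkg and WebApi_Port are hypothetical names. -->
<Parameters>
  <!-- Each ApplicationParameters file would supply its own value for this parameter. -->
  <Parameter Name="WebApi_Port" DefaultValue="8354" />
</Parameters>
<ServiceManifestImport>
  <ServiceManifestRef ServiceManifestName="WebApiPkg" ServiceManifestVersion="1.0.0" />
  <ResourceOverrides>
    <Endpoints>
      <!-- Name must match the endpoint declared in ServiceManifest.xml. -->
      <Endpoint Name="ServiceEndpoint" Port="[WebApi_Port]" Protocol="http" Type="Input" />
    </Endpoints>
  </ResourceOverrides>
</ServiceManifestImport>
```

Under that assumption, the service manifest keeps a single `ServiceEndpoint`, and the port becomes one more per-environment application parameter.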

Important: It looks like you can now finally override/configure different ports using parameters – this feature was added sometime after September 2017 so this wasn’t an option at the time for me.

In order to allow me to use different ports for my gateway API services, this is what I ended up doing in the Resources section:

    <Resources>
      <Endpoints>
        <!-- This endpoint is used by the communication listener to obtain the port on which to
             listen. Please note that if your service is partitioned, this port is shared with
             replicas of different partitions that are placed in your code. -->
        <Endpoint Protocol="http" Name="ServiceEndpoint-Development" Type="Input" Port="8354" />
        <Endpoint Protocol="http" Name="ServiceEndpoint-Uat" Type="Input" Port="8355" />
        <Endpoint Protocol="http" Name="ServiceEndpoint-Testing" Type="Input" Port="8356" />
        <Endpoint Protocol="http" Name="ServiceEndpoint-Staging" Type="Input" Port="8357" />
        <Endpoint Protocol="http" Name="ServiceEndpoint-Production" Type="Input" Port="8358" />
      </Endpoints>
    </Resources>

Aside: for internal-facing services, you should leave out the Port – Service Fabric will dynamically allocate a random port from the application port ranges specified during the creation of your Service Fabric Cluster.

Then, in the Config\Settings.xml file of my service, I added a parameter that allowed me to specify which Endpoint I wished to use:

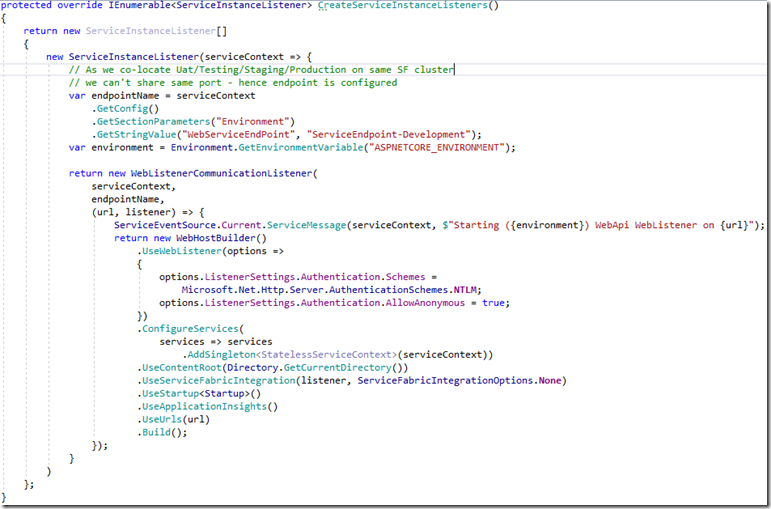

    <?xml version="1.0" encoding="utf-8" ?>
    <Settings xmlns:xsd="…">
      <Section Name="Environment">
        <Parameter Name="WebServiceEndPoint" IsEncrypted="false" Value="" />
      </Section>
    </Settings>

Finally, modify the WebApi.cs file of the API gateway service to read this setting and use the relevant endpoint name.

For reference, the service manifest import in ApplicationManifest.xml ends by passing the environment name to ASP.NET Core through an application parameter:

        ...
      </ConfigOverrides>
      <EnvironmentOverrides CodePackageRef="Code">
        <EnvironmentVariable Name="ASPNETCORE_ENVIRONMENT" Value="[AppEnvironment]" />
      </EnvironmentOverrides>
    </ServiceManifestImport>
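The manifest fragment above starts partway through the `ServiceManifestImport` element, so here is a hedged sketch of how the whole element might look with the `WebServiceEndPoint` setting overridden per environment. Only `WebServiceEndPoint`, the `Environment` section and `[AppEnvironment]` come from the snippets in this post; the names `WebApiPkg` and `WebApi_EndPointName` and the default values are assumptions made for illustration.

```xml
<!-- ApplicationManifest.xml (fragment). WebApiPkg and WebApi_EndPointName are hypothetical. -->
<Parameters>
  <Parameter Name="WebApi_EndPointName" DefaultValue="ServiceEndpoint-Development" />
  <Parameter Name="AppEnvironment" DefaultValue="Development" />
</Parameters>
<ServiceManifestImport>
  <ServiceManifestRef ServiceManifestName="WebApiPkg" ServiceManifestVersion="1.0.0" />
  <ConfigOverrides>
    <!-- "Config" is the name of the service's configuration package. -->
    <ConfigOverride Name="Config">
      <Settings>
        <Section Name="Environment">
          <!-- Replaces the empty Value declared in Config\Settings.xml. -->
          <Parameter Name="WebServiceEndPoint" Value="[WebApi_EndPointName]" />
        </Section>
      </Settings>
    </ConfigOverride>
  </ConfigOverrides>
  <EnvironmentOverrides CodePackageRef="Code">
    <EnvironmentVariable Name="ASPNETCORE_ENVIRONMENT" Value="[AppEnvironment]" />
  </EnvironmentOverrides>
</ServiceManifestImport>
```

Each file in the ApplicationParameters folder would then set `WebApi_EndPointName` to the matching endpoint, for example `ServiceEndpoint-Production` in the Production file.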

Issue 3: Ok…I’ve got a unique URI for my API Gateway…am I done?? Almost!

Although you’ve now got a unique port for your gateway microservices, there are a few additional things you need to remember:

- Service Fabric is in charge of balancing services across the SF Nodes…and during the life of the application, services can move from one Node to another – so don’t assume a service will remain on a particular Node!

- Microsoft best practice recommends that you deploy gateway API services on all SF Nodes. However, even if you do, a Node can still go down – so clients still should not hardcode the URI to a particular Node.

Service Fabric, regardless of whether it is deployed on-premise or in Azure, has a built-in Reverse Proxy. Basically, you can present the cluster with the following URL (I’ve omitted some of the options for simplicity):

    http(s)://<Cluster FQDN | internal IP>:Port/<ServiceInstanceName>/<Suffix path>

So, as an example, assuming my reverse proxy is on the default port of 19081, my application name is MyApp-Production, and my API service name is WebApi with route api/values, then clients can access your API layer at:

https://uriToMySfCluster.com:19081/MyApp-Production/WebApi/api/values

1 comment:

Hi,

Is this approach still valid with VS2017? I tried publishing with VS2017 to my cluster on Azure but it tries removing the existing application and fails in doing so.

Application Type RedBus and Version 1.0.0 was already registered with Cluster, unregistering it...

2>Unregister-ServiceFabricApplicationType : Application type and version is still in use

Greatly appreciate the help
